Transcript

Let’s ask a more fundamental question. Was it reasonable to set up covert photo-taking kiosks, or to rely on this kind of wide-scale facial recognition technology?

On the one hand, although there might be one person in the crowd who poses a threat to Taylor Swift, or even to the community, there are 59,999 other people who pose no threat at all, yet their faces are being scanned and information about their location, preferences and so on is being recorded.

On the other hand, what are the real costs for somebody in that audience who is neither a criminal nor a threat to Taylor Swift? At the end of the day, it sounds like their privacy is still being preserved, right?

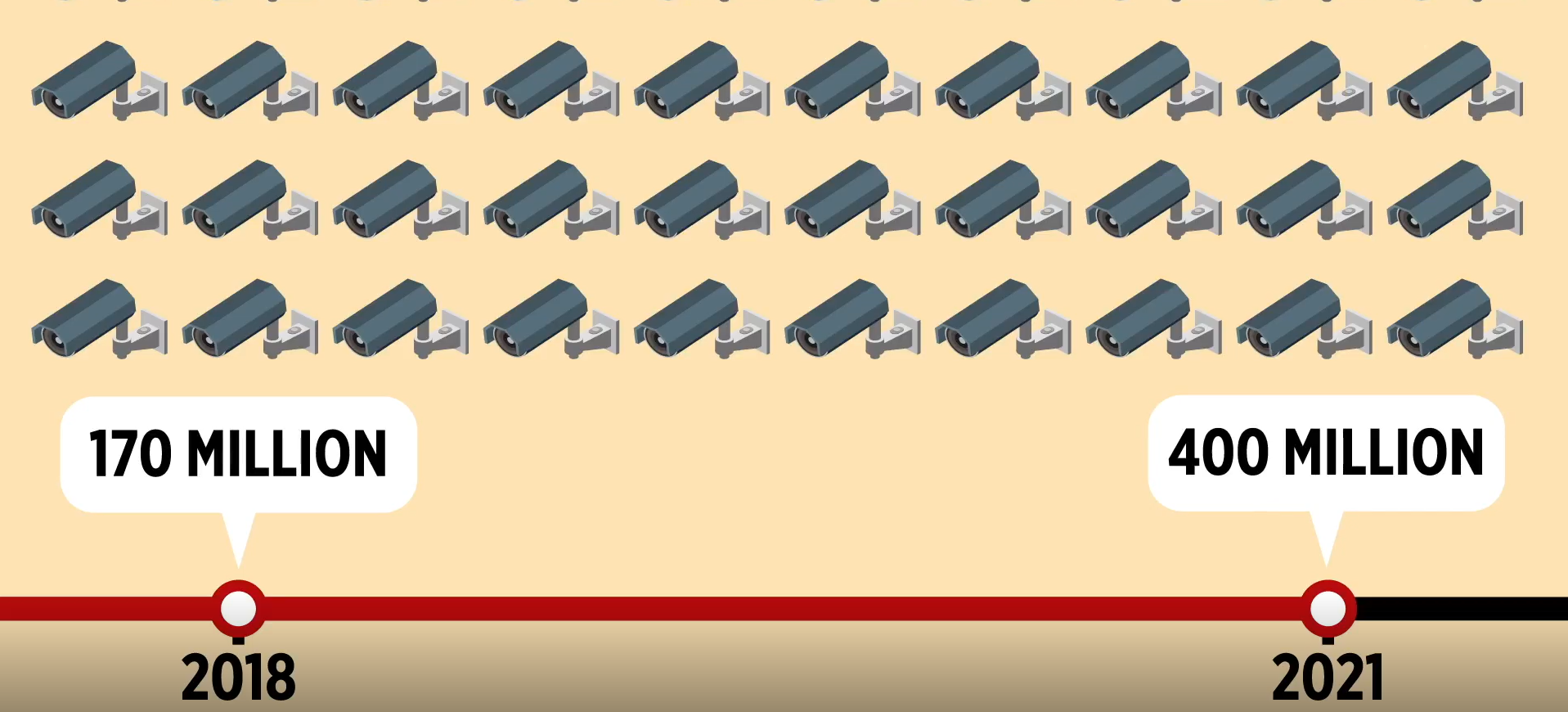

The thing is, whether we recognise it or not, we are under constant surveillance. You could say that everyone else’s privacy wasn’t really violated. But what happens when they are not looking for criminals? What happens if they’re looking for someone based on ideology? What happens if it’s not a benevolent government that’s utilising that technology? And what happens if it’s not a government at all?

It really doesn’t take much imagination to concoct a scenario in which an individual, a large company, or even a government could use these technologies to single people out and potentially cause them very significant personal harm.

Call us old-fashioned, but we are concerned that consumers are actually choosing this on their own, either knowingly or unknowingly. They are putting watches on their children and surveilling them everywhere they go. They are installing apps on their phones that track where they go and what they do. Maybe we are being naive, but the idea of linking facial recognition technology with geolocation and government police powers is quite disconcerting.