Transcript

Often when we think of AI or algorithms, we think of something impartial and neutral, something that is simply acting based on pure facts. And this is one of the reasons why we humans have begun using AI to help us with more subjective evaluations and decisions. If we can remove human error from the decision-making, that would lead to a more just and better world, right?

But the reality tends to be that algorithms are not as neutral as many have come to hope. This is because bias can get built in, either through the cultural bias of a programmer or through historical data that is itself prejudiced in a certain way. Google’s AI chief, John Giannandrea, has said that his main concern regarding AI does not revolve around killer robots or Terminator-style scenarios. Instead, he’s worried about the biases that he says, quote, “may be hidden inside algorithms used to make millions of decisions every minute.”

First of all, what do we actually mean when we say that AI or an algorithm is biased? If you recall our talk about machine learning, a vital part of that revolves around the training of AI: training it to see and follow patterns by feeding it large amounts of information and data, training it to understand what success looks like, fine-tuning the results, iterating, and so forth. In this process, human error and prejudice can work their way into the algorithm. So let’s take a look at an example.

In the past, if you were about to buy a home, you would typically meet in person with a mortgage officer at your local bank. You would visit their workplace, have a chat, and provide any relevant documentation. This person would then review your documentation, and later, they’d tell you whether the bank was going to lend you money or not.

For the lending officer, this would typically be a fairly subjective exercise. The majority of home loan applicants fall into something of a grey area, where there’s no definitive yes or no with respect to the loan, so the officer has some discretion.

With the recent advent of more advanced algorithms, and to increase efficiency, many banks have simplified this process: the decision-making is now, to some extent, outsourced to AI, which makes the recommended decision on the loan application. By doing so, this process should be more accurate, objective and fair, right?

Well, not always. Among the many studies that have been done, one recent study in particular, by the University of California, found strong bias and discrimination by these AI lenders, such as charging anywhere from 11 to 17 percent higher interest rates to African-American and Latino borrowers. Additionally, minority applicants were more likely to be rejected than white applicants with a similar credit profile.
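One way to check for the kind of gap described here is to compare outcomes across groups for applicants with a similar credit profile. The following is only a minimal sketch in Python: the records, the group labels "A" and "B", the credit band names, and the `audit` helper are all invented for illustration and do not come from the study mentioned above.

```python
# Hypothetical lending decisions, invented for illustration only.
# Each record: (group, credit_band, approved, interest_rate_percent)
decisions = [
    ("A", "medium", True, 4.0),
    ("A", "medium", True, 4.2),
    ("A", "medium", False, None),
    ("A", "medium", True, 4.1),
    ("B", "medium", True, 4.6),
    ("B", "medium", False, None),
    ("B", "medium", False, None),
    ("B", "medium", True, 4.8),
]


def audit(rows, band):
    """Compare approval rate and mean interest rate per group within one credit band."""
    report = {}
    for group in sorted({row[0] for row in rows}):
        subset = [row for row in rows if row[0] == group and row[1] == band]
        if not subset:
            continue  # no applicants from this group in this band
        approved = [row for row in subset if row[2]]
        mean_rate = sum(row[3] for row in approved) / len(approved) if approved else None
        report[group] = {
            "approval_rate": len(approved) / len(subset),
            "mean_rate": mean_rate,
        }
    return report


report = audit(decisions, "medium")
for group, stats in report.items():
    print(group, stats)
```

Holding the credit band fixed matters here: a gap that remains between applicants with a similar profile is much harder to explain away as a difference in creditworthiness.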

Lending discrimination is nothing new, and has been widely reported on in the past. In another US example, The Washington Post uncovered widespread lending discrimination back in 1993, showing how various home-lending policies were negatively impacting minority residents.

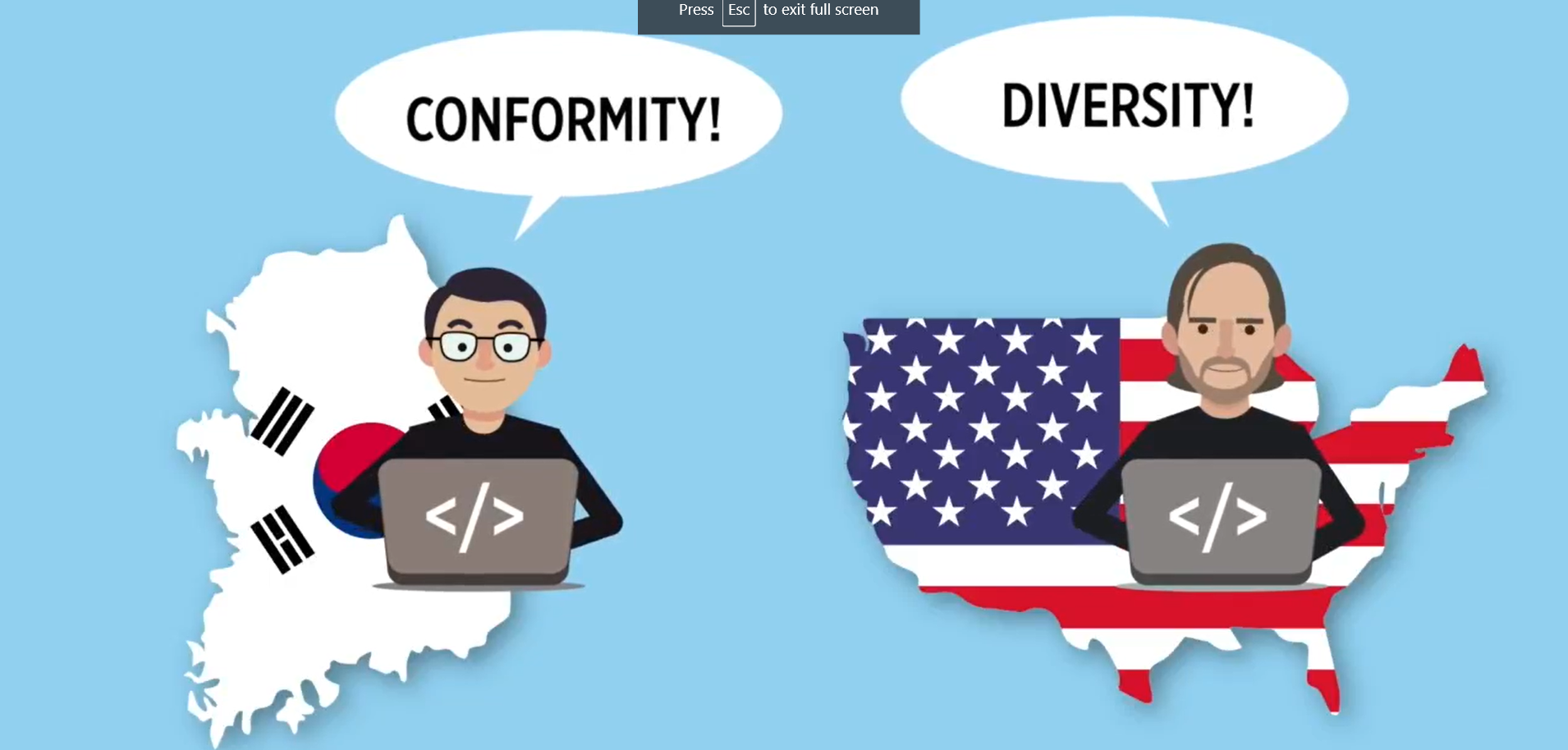

What further complicates the problem of AI bias is what people refer to as “black box” algorithms. This is similar to what we discussed earlier about opaque models that lack transparency. Private companies are generally hesitant to open the door for other people to scrutinise what they’ve been doing. So how do we make an inclusive algorithm when the data, its developers, and the organisations that hire them are seemingly not diverse and inclusive?

Overall, while algorithms are helpful, they may not make things as fair as we would ideally have hoped, and we therefore have to be careful not to apply them blindly, especially since they have a tendency to repeat past practices, repeat patterns, and automate the status quo.
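To make the idea of automating the status quo concrete, here is a minimal sketch in Python. It assumes a deliberately simplistic "model" that just learns past approval rates, and the historical records, group labels, and the `train` and `predict` helpers are all hypothetical. Real lending models are far more complex, but the mechanism is the same: if past decisions were skewed, a system trained to imitate them recommends the same skew.

```python
from collections import defaultdict

# Hypothetical historical decisions, invented for illustration only.
# Each record: (credit_band, group, approved). In this made-up history,
# group "B" was approved less often than group "A" in the same credit band.
history = (
    [("medium", "A", True)] * 80 + [("medium", "A", False)] * 20
    + [("medium", "B", True)] * 40 + [("medium", "B", False)] * 60
    + [("high", "A", True)] * 95 + [("high", "A", False)] * 5
    + [("high", "B", True)] * 90 + [("high", "B", False)] * 10
)


def train(records):
    """Learn the past approval rate for every (credit_band, group) pair."""
    counts = defaultdict(lambda: [0, 0])  # key -> [approved, total]
    for band, group, approved in records:
        counts[(band, group)][0] += int(approved)
        counts[(band, group)][1] += 1
    return {key: approved / total for key, (approved, total) in counts.items()}


def predict(model, band, group, threshold=0.5):
    """Recommend approval when the learned past approval rate reaches the threshold."""
    return model[(band, group)] >= threshold


model = train(history)

# Two applicants in the same credit band who differ only by group.
decision_a = predict(model, "medium", "A")
decision_b = predict(model, "medium", "B")
print(decision_a, decision_b)  # True False
```

Nothing in this sketch is told to discriminate. It only repeats the pattern in the data it was given, which is why the two applicants end up with different recommendations.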