Transcript

Does technology have the capability or potential to usher in a new era of utopian society for us?

We think so. Empirically, if you look at the world over the past 50-60 years, there’s been more progress on most measures of what a good society would be than we’ve ever seen before. So FinTech also has the ability to usher in some of these changes that can make society even better, bring us closer together, and hopefully help with issues of scarcity, financial inclusion, etc. But we do think that there’s a legitimate question about whether this can happen in the existing structure with the businesses that we have today.

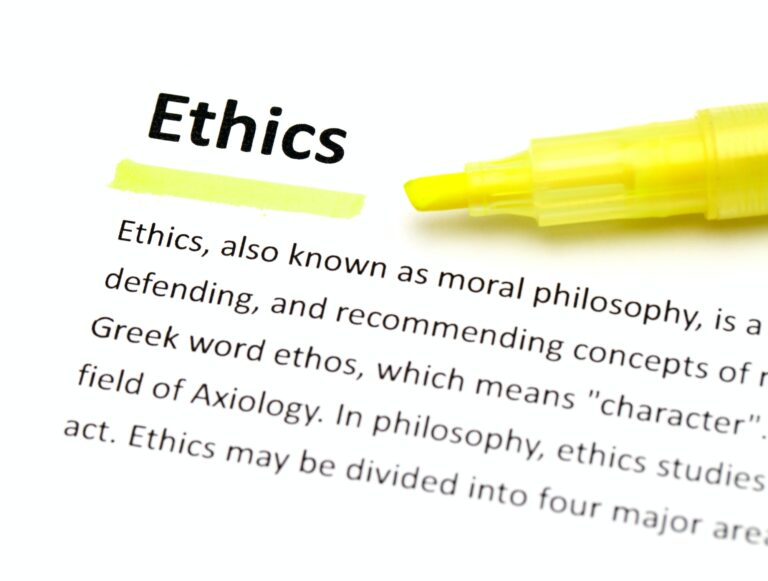

One of the fundamental questions that people have been asking for decades now is whether businesses have a social responsibility to generate some good for society, or whether their responsibility is simply to generate profits for shareholders. We think this underlying question will determine whether we can use technology to become a utopian society.

While many people nowadays think of this debate as a new movement, for example the focus on environmental, social and corporate governance (ESG) factors, the reality is that the debate around what the purpose of a company should be has been going on for at least a century, if not longer. Should it be for shareholders or should it be focused on stakeholders? Should pursuing profit be the sole focus or should there be broader considerations?

In our opinion, this debate is actually quite settled now in the major business world. Outside of some rare situations, there’s usually no legal requirement for most companies to pursue solely profit maximisation. Additionally, influential people have become advocates of moving beyond such pursuits. Larry Fink is the CEO of BlackRock, the largest independent asset manager in the world. By the very nature of his business, you would expect him to be solely profit maximising. But over the past few years, in his annual letters to CEOs, he’s been talking about the broader purpose that businesses and companies have to society, beyond just profit maximisation. And of course, there are Jamie Dimon and Warren Buffett, who have signed on to these kinds of pledges to say that the short-term maximisation of share price is not a good model.

We think, intellectually, we’ve moved beyond considering profit maximisation as the sole purpose. If you talk to any business leaders, or people in the field who have thought about the issue, they will all clearly say that a company should pursue other social benefits in addition to profitability. There should be a holistic perspective on how we approach this.

But the real rub is in how they actually accomplish that. There have been actual lawsuits where shareholders sued company executives for using what the shareholders believe to be their funds and profits for some social purpose.

When we created the Massive Open Online Course, which this book is based on, we talked to people in the tech space about their perceptions of the broader impact of technologies. Who should be responsible for thinking about the utilisation of technologies? Should inventors, founders or CEOs actually be considering the positive and negative implications of technology? And the response was, surprisingly or not, that they’re not really thinking about that.

Of course, we are not pointing fingers at these business owners or startup founders. Ethics is not part of most engineering books or engineering programmes. Unlike business students, engineers typically aren’t required to study ethics. That is starting to change: Stanford has in recent years created a computer science ethics course which is a required component for their CS graduates. Many institutions are also trying to embed ethics into the curriculum, which we hope is a good thing for the matters we have discussed.

The interesting point about people creating new products, be it new code or some new technological application, is that the question of what the societal, ethical, or public policy impact will be doesn’t usually come up until after the product is put in use. To use an analogy, once you have a shiny new sports car in the garage, you normally wouldn’t think about whether you should drive it or if it is safe. You’re just gonna drive it because the temptation is just too high to resist.

And if you think about it in the context of new technology, drones would be one example. People just started using them without considering any of the implications, until negative outcomes occurred.

So if a company has invested so much financial capital, human capital, and time into producing its equivalent of a shiny sports car, there’s going to be a lot of pressure to roll it out. Internally, if some enlightened folks say, “We need to think about this before we do it”, that’s where the real tension comes in. Because everyone else at that table will say, “Oh, this is an important thing to discuss, but let’s just roll it out first.” And if you imagine hundreds or thousands of companies across the world implementing these new technologies in the same way, we could end up in a really not-so-great place.